License Clearance Tool - Description and Documentation


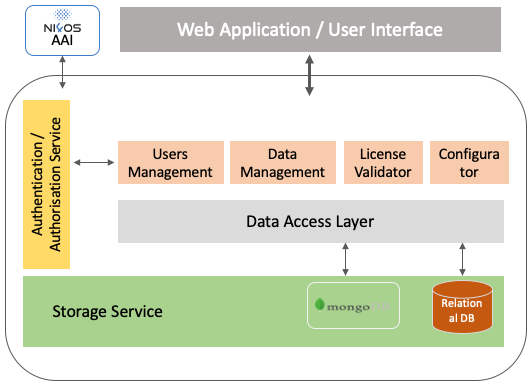



Revision as of 10:32, 1 July 2020

Intro - Aim & purpose of the tool

This tool is the output for Task T4.4 that aims to mainstream concrete certification standards, tools and mechanisms for open research data management and certification schemes for data repositories, aligning with activities and results of INFRAEOSC-5c. It targets both data intensive RDM (through cloud compute) and the curation and handling of the long tail of science with the aim of establishing consolidated models of RDM activities in the target countries. Building on the work undertaken in T4.2 and T4.3, this task delivers a certification tool for supporting trustworthiness of data repositories and ensuring their FAIRness in a standardised and interoperable fashion, with the objective to support the onboarding of repositories to EOSC.

The production of new datasets, and even more so the creation of derivative data works (for purposes such as content creation, service delivery or process automation), is often accompanied by legal uncertainty about usage rights and by high costs for clearing licensing issues. With the "Dataset License Clearance Tool" (DLCT), NI4OS-Europe aims to facilitate and automate the clearance of rights (copyright) for datasets that must be cleared before they are publicly released under an open licence and/or stored in a publicly trusted FAIR repository. The tool will additionally allow crowd-sourced documentation of the clearance process. Main aspects of the tool include:

- Data clearance not bound to the user that initiated the procedure, so it can be started and finished by different users.

- Potentially includes crowd-sourced clearance.

- Clearance metadata will be an open-source resource.

- Provides equivalence, similarity and compatibility between licenses if used in combination, particularly for derivative works.

Targeted use

The intended use of the tool is to provide a guided approach for establishing the proper open-source licence required for the creation of a new (or synthetic) dataset, or for the re-use of an existing unlicensed one. The procedure takes into account many potential data managers (users initiating or completing a data clearance procedure). Potential users may be researchers and research organisations.

Background work: Legal aspects

The Dataset License Clearance Tool (DLCT) comes as a response to the increased demand for technical solutions that address legal aspects in FAIR and ORDM. It is thus intended to support researchers in publishing in FAIR/open modes. The tool development was therefore preceded by extensive legal research and analysis of the most commonly used licensing schemes. These have been put in a matrix, so as to allow an "all with all" comparison and reveal compatible and conflicting licenses. The first version of the tool provides guidance for existing standard open-source licenses only. The matrix, however, is continuously expanded and also includes custom licenses. The "standard to custom" and "custom to custom" comparisons pose a challenge both for the analysis of license compatibility and for the technical deployment of the solution. For this reason the results of this activity are not included in the "proof of concept" version of the tool, but will be implemented in later versions.
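The "all with all" matrix can be pictured as a lookup table keyed by pairs of licenses. The following is only a minimal sketch under stated assumptions, not the tool's actual matrix: the license identifiers (`LIC-A`, `LIC-B`, `LIC-C`), the verdicts and the function names are hypothetical placeholders, and compatibility is treated as symmetric, which is a simplification.

```python
from itertools import combinations

# Hypothetical placeholder matrix: identifiers and verdicts are invented for
# illustration and do not reflect any real legal analysis.
COMPATIBLE = {
    frozenset({"LIC-A", "LIC-B"}): True,
    frozenset({"LIC-A", "LIC-C"}): True,
    frozenset({"LIC-B", "LIC-C"}): False,
}


def are_compatible(first: str, second: str) -> bool:
    """Look up one pair; a license is always compatible with itself."""
    if first == second:
        return True
    # Pairs missing from the matrix are treated as conflicting (conservative).
    return COMPATIBLE.get(frozenset({first, second}), False)


def conflicting_pairs(licenses: list[str]) -> list[tuple[str, str]]:
    """Return every pair of distinct licenses that cannot be combined."""
    unique = sorted(set(licenses))
    return [(a, b) for a, b in combinations(unique, 2) if not are_compatible(a, b)]


if __name__ == "__main__":
    print(conflicting_pairs(["LIC-A", "LIC-B", "LIC-C"]))
```

Treating unknown pairs as conflicting keeps the sketch on the safe side while the matrix is still being expanded.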

Workflows

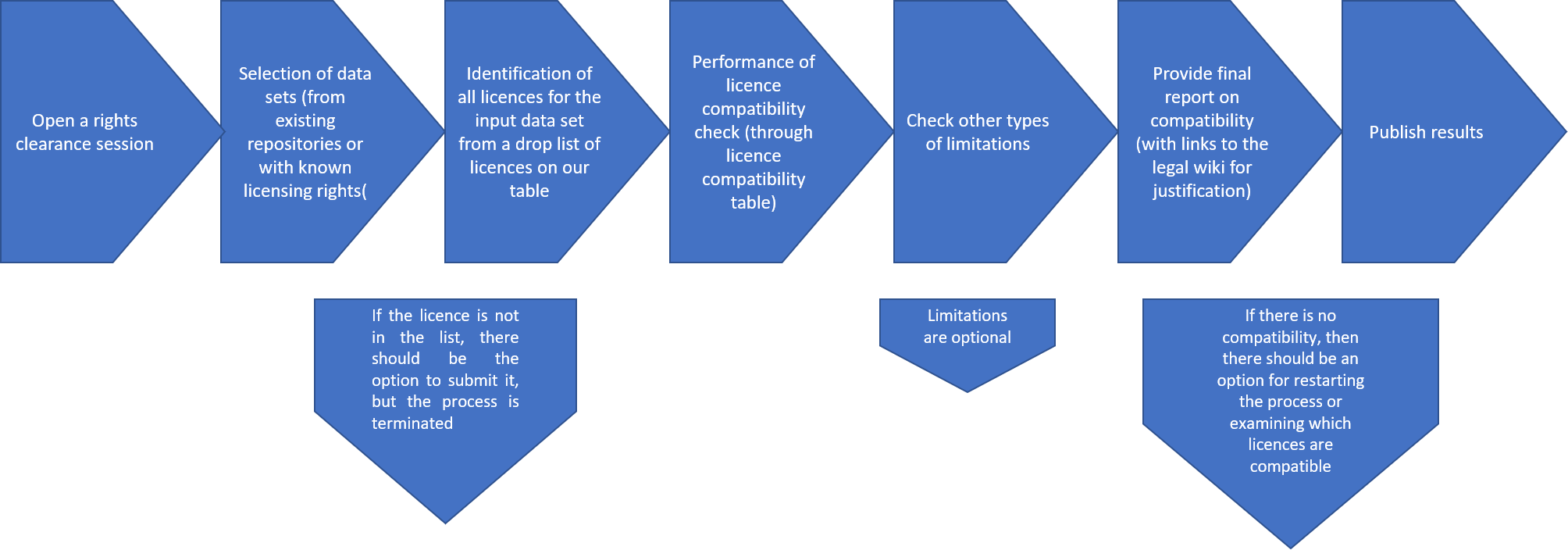

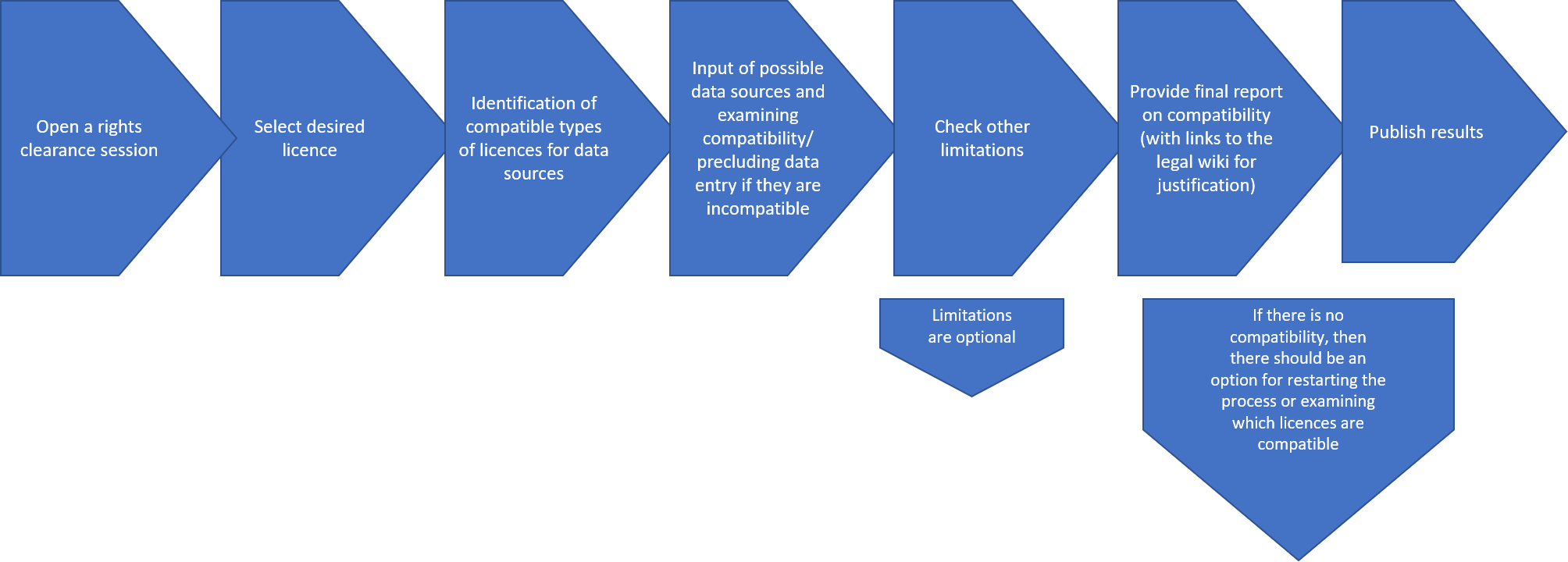

Two main workflows are foreseen, following the two usage scenarios that the application covers and that are considered the most needed. In the first scenario, a user (data manager) wants to assign an appropriate open-source licence either to an existing dataset that currently has no license or to a derivative dataset that combines existing datasets, licensed or not. The associated workflow, Workflow I in the flowchart below, describes the procedure the tool follows for this scenario. The steps are:

- Start a new Rights Clearance Procedure. A user must initiate a new process for a specific dataset. This process is bound to the dataset, but not the user, which means that a different user may finish the process (or alter it).

- Existing dataset(s) selection. These are the initial datasets, either existing ones or future derivative works, whose rights are to be cleared or which require a compatible license.
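The idea that a procedure is bound to the dataset rather than to the user can be sketched as follows. This is a hypothetical model, not the tool's data model: the class name, field names and user names are assumptions introduced for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ClearanceProcedure:
    """A rights clearance procedure keyed by dataset, not by user (hypothetical)."""

    dataset_id: str
    source_dataset_ids: list[str] = field(default_factory=list)
    contributors: list[str] = field(default_factory=list)  # users, in order of first action
    finished: bool = False

    def _record(self, user: str) -> None:
        # Remember each user once, in the order they first touched the procedure.
        if user not in self.contributors:
            self.contributors.append(user)

    def add_source_dataset(self, user: str, source_id: str) -> None:
        """Any user may add an existing dataset whose rights need clearing."""
        self._record(user)
        self.source_dataset_ids.append(source_id)

    def finish(self, user: str) -> None:
        """The finishing user need not be the one who started the procedure."""
        self._record(user)
        self.finished = True


if __name__ == "__main__":
    procedure = ClearanceProcedure(dataset_id="ds-new")
    procedure.add_source_dataset("alice", "ds-1")
    procedure.finish("bob")
    print(procedure)
```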

Workflow I: From data sets to Rights clearance

Workflow II: From desired licence to the data-set

Overall Architecture

At its core, the Dataset License Clearance Application contains a schema description that corresponds to the data that need to be filled in to process a clearance application. This description consists of a number of sections and questions that together capture all the information needed about the newly generated dataset and the existing datasets from which it is derived, including descriptive metadata and the available/desired licenses. It also defines the ordering of the sections and questions, any dependencies among them and the vocabularies used to fill in possible answers. The description will evolve over time and be enhanced with additional questions. To remain flexible, we map all the questions, the sections they belong to and the possible list of responses (if any) into a JSON (https://www.json.org/json-en.html) document, which is stored in a NoSQL database, MongoDB (https://www.mongodb.com/). This schema is retrieved by the front-end application, which dynamically creates a form-based wizard.
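How a wizard could be derived from such a document is sketched below. This is a sketch under stated assumptions: the top-level keys `sections` and `questions`, the sample entries and the function name are illustrative and not taken from the tool, and the JSON is embedded as a string instead of being fetched from MongoDB so that the example stays self-contained.

```python
import json

# Illustrative schema document; the layout and entries are assumptions.
SCHEMA_JSON = """
{
  "sections": [
    {"id": "s02", "name": "Second section", "order": 2},
    {"id": "s01", "name": "First section", "order": 1}
  ],
  "questions": [
    {"id": "q002", "name": "B", "sectionId": "s01", "order": 2},
    {"id": "q001", "name": "A", "sectionId": "s01", "order": 1},
    {"id": "q003", "name": "C", "sectionId": "s02", "order": 1}
  ]
}
"""


def build_wizard_steps(schema: dict) -> list[dict]:
    """Return one wizard step per section, each with its questions in order."""
    steps = []
    for section in sorted(schema["sections"], key=lambda s: s["order"]):
        # Collect the questions of this section and sort them by their own order.
        questions = [q for q in schema["questions"] if q["sectionId"] == section["id"]]
        questions.sort(key=lambda q: q["order"])
        steps.append({"section": section["name"], "questions": [q["name"] for q in questions]})
    return steps


if __name__ == "__main__":
    for step in build_wizard_steps(json.loads(SCHEMA_JSON)):
        print(step)
```

Because the ordering lives in the schema rather than in the code, adding a question only requires a new entry in the JSON document.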

Components

The application is composed of two main services, the back-end service and the front-end application. The back-end service implements all the business logic of the application and provides the front-end with the methods it needs to offer end users the User Interface for clearing the license of a new dataset. The back-end service is composed of a number of components, each responsible for a different part of the system. Currently these are:

- a user management component for managing the available users and their roles,

- the data management component for managing all the data and interacting with the data access layer,

- the configurator component for manipulating the schema description and

- the license validator component.

Data are stored in either a NoSQL database or a relational one. The image below presents the block diagram of the application. Some components have not yet been implemented, but will become available in later versions.

Schema Description

The structure of the schema description is presented below, using an example. Each element of the schema (section, question, vocabulary) is described in a separate subsection.

Section Description

    {
      "id": "s01",
      "name": "DataSet Information",
      "description": "Information about the generated dataset",
      "order": 1,
      "mandatory": true,
      "acceptsMultiple": false
    }

The fields above describe a section. Each section contains:

- Id: The id of the section. For internal use only

- Name: A name to be displayed to the end user

- Description: A description to be displayed to the end user

- Order: The order of this section, compared to the other sections

- Mandatory: If the section must be filled in or not

- AcceptsMultiple: A field that indicates if the user can add multiple answers in this section.
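The `mandatory` and `acceptsMultiple` flags could be enforced along the following lines. This is one possible reading, not the tool's implementation: it assumes a section's answers arrive as a list with one entry per set of answers, and the function name and messages are hypothetical.

```python
def check_section_answers(section: dict, answers: list) -> list[str]:
    """Return the problems with the number of answers given for a section."""
    problems = []
    # A mandatory section needs at least one set of answers.
    if section.get("mandatory", False) and not answers:
        problems.append(f"section {section['id']} is mandatory but has no answers")
    # Without acceptsMultiple, at most one set of answers is allowed.
    if not section.get("acceptsMultiple", False) and len(answers) > 1:
        problems.append(f"section {section['id']} accepts a single answer only")
    return problems


if __name__ == "__main__":
    section = {
        "id": "s01",
        "name": "DataSet Information",
        "order": 1,
        "mandatory": True,
        "acceptsMultiple": False,
    }
    print(check_section_answers(section, []))
    print(check_section_answers(section, [{"q001": "first"}, {"q001": "second"}]))
```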

Question Description

    {
      "id": "q013",
      "name": "Dataset type",
      "description": "Select the type of the datasource",
      "sectionId": "s02",
      "order": 7,
      "mandatory": true,
      "responseType": "DropDown",
      "dependingQuestionId": "q011",
      "public": true,
      "vocabularyId": "v001"
    }

Each question contains the following fields:

- Id: The id of the question. For internal use only

- Name: The name to be displayed to the end user

- Description: The description to be displayed to the end user

- SectionId: The section it belongs to

- Order: The order of this question in the section it belongs to

- Mandatory: If the question must be answered or not

- responseType: The type of the expected response. Currently we support: ShortText, Text, Checkbox (for Boolean questions), FileUpload, DropDown (responses are limited to a specific list)

- public: If this response will be public or not

- vocabularyId: valid only for responseType: DropDown. An ID to the vocabulary from which the responses will be used.

- DependingQuestionId: The id of a previous question, set when the responses for this question depend on the response given to that question.
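The field rules listed above lend themselves to a simple consistency check. The following is a minimal sketch: the response types and the DropDown/vocabularyId rule come from the list above, while the choice of which fields count as required, the function name and the error messages are assumptions.

```python
RESPONSE_TYPES = {"ShortText", "Text", "Checkbox", "FileUpload", "DropDown"}
# Assumption: these fields are treated as required for every question.
REQUIRED_FIELDS = ("id", "name", "sectionId", "order", "mandatory", "responseType")


def validate_question(question: dict) -> list[str]:
    """Return a list of problems found in one question description."""
    errors = [f"missing field: {name}" for name in REQUIRED_FIELDS if name not in question]
    response_type = question.get("responseType")
    if response_type is not None and response_type not in RESPONSE_TYPES:
        errors.append(f"unsupported responseType: {response_type}")
    # vocabularyId is valid only for DropDown questions, which need one.
    if response_type == "DropDown" and "vocabularyId" not in question:
        errors.append("DropDown questions need a vocabularyId")
    if response_type != "DropDown" and "vocabularyId" in question:
        errors.append("vocabularyId is only valid for DropDown questions")
    return errors


if __name__ == "__main__":
    question = {
        "id": "q013",
        "name": "Dataset type",
        "description": "Select the type of the datasource",
        "sectionId": "s02",
        "order": 7,
        "mandatory": True,
        "responseType": "DropDown",
        "dependingQuestionId": "q011",
        "public": True,
        "vocabularyId": "v001",
    }
    print(validate_question(question))
```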

Vocabulary Description

    {
      "id": "v003",
      "name": "Hardware Licenses",
      "description": "Hardware Licenses",
      "terms": [
        {
          "id": "v003_001",
          "name": "CERN Open Hardware License"
        },
        {
          "id": "v003_002",
          "name": "Simputer General"
        },
        {
          "id": "v003_003",
          "name": "TAPR Open Hardware License"
        }
      ]
    }

Each vocabulary consists of an Id, a name, a description and a finite list of terms. Each term has an Id and a name / label.
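A DropDown question refers to a vocabulary through its `vocabularyId`, so the list of selectable responses can be resolved with a lookup. The sketch below reuses the vocabulary from the example above. The in-memory `VOCABULARIES` dictionary, the function name and the question id `q0xx` are hypothetical, and filtering by `dependingQuestionId` is left out because its exact semantics are not specified here.

```python
VOCABULARIES = {
    "v003": {
        "id": "v003",
        "name": "Hardware Licenses",
        "description": "Hardware Licenses",
        "terms": [
            {"id": "v003_001", "name": "CERN Open Hardware License"},
            {"id": "v003_002", "name": "Simputer General"},
            {"id": "v003_003", "name": "TAPR Open Hardware License"},
        ],
    }
}


def dropdown_options(question: dict, vocabularies: dict) -> list[tuple[str, str]]:
    """Return (term id, label) pairs for a DropDown question, else an empty list."""
    if question.get("responseType") != "DropDown":
        return []
    vocabulary = vocabularies[question["vocabularyId"]]
    return [(term["id"], term["name"]) for term in vocabulary["terms"]]


if __name__ == "__main__":
    # Hypothetical question that points at the vocabulary above.
    question = {"id": "q0xx", "responseType": "DropDown", "vocabularyId": "v003"}
    for term_id, label in dropdown_options(question, VOCABULARIES):
        print(term_id, label)
```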

Back-end Service

The back-end is a REST web service implemented with Java EE and the Spring Boot framework. The service offers an API (Application Programming Interface) with all the methods required to serve the application’s needs.

Front-end Application

The front-end application is implemented using Angular (https://angular.io/), a TypeScript-based open-source web application framework. In its first version it offers a draft version of the dataset license clearance form wizard. The wizard is created at runtime, supporting the dynamic schema description presented above.

Version features

- Initial version provides a proof-of-concept workflow.

- Initially provides guidance for existing standard open-source licenses only.